This posting is a follow-up to a previous posting in which I explained how business process models can be drilled down to user-goal level use cases and how to do service discovery based on process models.

In this third posting, I will show how the requirements captured so far can be transformed into an analysis model that provides the basis for the design.

Analysis

What kind of artifacts should be created during the Analysis process depends on the nature of the use cases and the architecture that is going to be used to implement them. The most apparent candidates for adding detail are activity or sequence diagrams, class diagrams and (where useful) collaboration diagrams (used to describe how various “components” work together). For SOA projects, message descriptions and message transformations are also obvious candidates.

For services that support more than one operation, you also need to specify the name of each individual operation, as well as the types of its input and output messages.
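To make this concrete, the following minimal sketch records a service contract at the analysis level: a service name plus, per operation, the names of its input and output message types. The service name `VerificationService`, the operation names and the message type names are all hypothetical; bundling the three verification use cases into one service is an assumption made purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Operation:
    """One operation of a service, with the names of its message types."""
    name: str
    input_message: str
    output_message: str


@dataclass(frozen=True)
class ServiceContract:
    """A service and the operations it supports (analysis level, not WSDL)."""
    name: str
    operations: tuple

    def operation(self, name: str) -> Operation:
        # Look up an operation by name; fail loudly if it was never specified.
        for op in self.operations:
            if op.name == name:
                return op
        raise KeyError(f"{self.name} has no operation {name!r}")


# Hypothetical service bundling the three verification use cases.
verification_service = ServiceContract(
    name="VerificationService",
    operations=(
        Operation("verifyOwnership",
                  "VerifyOwnershipRequest", "VerifyOwnershipResponse"),
        Operation("verifyElectoralRegisters",
                  "VerifyElectoralRegistersRequest", "VerifyElectoralRegistersResponse"),
        Operation("verifyResidence",
                  "VerifyResidenceRequest", "VerifyResidenceResponse"),
    ),
)
```

A list like this is enough to agree on operations and message types before anyone writes the actual WSDL.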

Depending on its granularity, a service supports a summary use case (like the Parking Permit process), a user goal use case (like Apply for Parking Permit) or a subfunction use case. No example of the latter has been included, but you can imagine that for each separate channel (letter, email, SMS) a subfunction use case could be created that describes a generic notification service that can be used to send all kinds of notifications to third parties.
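One way to picture such subfunction use cases is a generic notification service that delegates to one channel per subfunction. The sketch below is only an assumption about how that could be structured; all class names are hypothetical, and the channels are stubs that describe what would be sent rather than actually sending anything.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass(frozen=True)
class Notification:
    recipient: str  # postal address, e-mail address or phone number
    subject: str
    body: str


class NotificationChannel(ABC):
    """One subfunction use case per channel."""

    @abstractmethod
    def send(self, notification: Notification) -> str:
        """Deliver the notification and return a delivery reference."""


# Stub channels: they only describe what would be sent.
class LetterChannel(NotificationChannel):
    def send(self, notification: Notification) -> str:
        return f"letter to {notification.recipient}: {notification.subject}"


class EmailChannel(NotificationChannel):
    def send(self, notification: Notification) -> str:
        return f"email to {notification.recipient}: {notification.subject}"


class SmsChannel(NotificationChannel):
    def send(self, notification: Notification) -> str:
        # SMS has no subject line, so only the body is sent.
        return f"sms to {notification.recipient}: {notification.body}"


class NotificationService:
    """Generic notification service that delegates to a channel."""

    def __init__(self, channels: dict):
        self._channels = dict(channels)

    def notify(self, channel: str, notification: Notification) -> str:
        if channel not in self._channels:
            raise ValueError(f"unsupported channel: {channel!r}")
        return self._channels[channel].send(notification)


service = NotificationService(
    {"letter": LetterChannel(), "email": EmailChannel(), "sms": SmsChannel()}
)
```

Callers such as the Parking Permit process then only depend on `notify`, so adding a channel does not change the processes that send notifications.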

Unless development is done in parallel, with other systems (or parts of systems) depending on a service that is still to be built, there is not much value at this point in specifying the exact WSDL of the service. For any external service you use, however, you need at least the WSDL location, if not the WSDL itself.

Activity Diagrams

When there is a flow involved, it might be useful to create an activity diagram. An obvious example would be a use case for a service that will be implemented as a BPEL process. As already noted, the activity diagram for the Validate Parking Application is an example of that. Use cases that involve a user interface with a complex screen flow are also good candidates.

Class Diagrams

When there is data involved, a class diagram seems the obvious choice to document it. Many people doing SOA projects never seem to make a class diagram. Realize, though, that class diagrams add as much value to SOA projects as they do to, for example, pure Java/XML projects, since messages are about handling data as well. Having class diagrams available can greatly help in defining message formats and transformations.

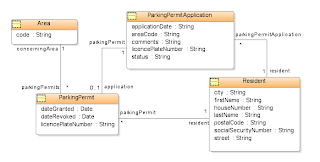

When analyzing the Parking Permit use case, the following classes can be recognized: Resident, Parking Permit Application, and Parking Permit. A distinction has been made between Parking Permit Application and Parking Permit, as not all applications will result in a permit, and the application follows a process with statuses that do not apply to the permit itself, and vice versa.

Not explicit in the description, but obviously needed if you think about it, is the notion of an Area. You will need it to determine whether there are sufficient parking lots available. You might argue that this was not discovered earlier because the way the waiting list is processed has not yet been worked out in sufficient detail.
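As a rough illustration of these classes, here is a minimal sketch in Python dataclasses. All attribute names, the status values and the `decide` rule (grant a permit while the Area has spaces left, otherwise put the application on the waiting list) are assumptions; the actual waiting-list processing has not been worked out in this analysis.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import Optional


class ApplicationStatus(Enum):
    # Hypothetical statuses; they apply to the application, not to the permit.
    RECEIVED = "received"
    WAITING_LIST = "waiting list"
    GRANTED = "granted"
    REJECTED = "rejected"


@dataclass
class Area:
    name: str
    parking_spaces: int
    permits_issued: int = 0

    def has_capacity(self) -> bool:
        return self.permits_issued < self.parking_spaces


@dataclass
class Resident:
    name: str
    address: str
    area: Area


@dataclass
class ParkingPermit:
    permit_number: str
    license_plate: str
    valid_from: date
    area: Area


@dataclass
class ParkingPermitApplication:
    applicant: Resident
    license_plate: str
    status: ApplicationStatus = ApplicationStatus.RECEIVED
    # Not every application results in a permit.
    permit: Optional[ParkingPermit] = None

    def decide(self, permit_number: str, today: date) -> None:
        # Hypothetical rule: grant while the Area has capacity, else wait.
        area = self.applicant.area
        if area.has_capacity():
            self.permit = ParkingPermit(permit_number, self.license_plate, today, area)
            area.permits_issued += 1
            self.status = ApplicationStatus.GRANTED
        else:
            self.status = ApplicationStatus.WAITING_LIST
```

Keeping the status on the application and not on the permit mirrors the distinction made above between the two classes.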

The class diagram for the Parking Permit use case could look as in the following figure:

Message Descriptions

Which format to use for message descriptions depends first and foremost on who needs to validate them. Some people will prefer more “logical” descriptions in Word, while more technically oriented people will probably have no problem with XSDs.

Mind that to create XSDs that translate 1:1 to an implementation, you need to understand how the messaging will be implemented. For example, initially one XSD was created for the Resident and one for the Parking Permit Application, only to discover that it was more practical to create a single XSD containing both and use that as the format for the message going from the resident to the Parking Process service. Had the Apply for Parking Permit use case been detailed further, you would probably have found that out up front.
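To illustrate the difference, the sketch below builds one request message that carries both the Resident and the Parking Permit Application, using Python's standard `xml.etree.ElementTree`. The namespace, the root element name and the field names are hypothetical; in a real project the structure would follow from the combined XSD.

```python
import xml.etree.ElementTree as ET

NS = "urn:example:parkingpermit"  # hypothetical namespace


def _add_section(parent: ET.Element, name: str, fields: dict) -> None:
    # One child element per class, with one sub-element per attribute.
    section = ET.SubElement(parent, f"{{{NS}}}{name}")
    for field, value in fields.items():
        ET.SubElement(section, f"{{{NS}}}{field}").text = str(value)


def build_apply_request(resident: dict, application: dict) -> str:
    """Build a single request message containing both the Resident and the
    Parking Permit Application, instead of two separate messages."""
    ET.register_namespace("pp", NS)
    root = ET.Element(f"{{{NS}}}ApplyForParkingPermitRequest")
    _add_section(root, "Resident", resident)
    _add_section(root, "ParkingPermitApplication", application)
    return ET.tostring(root, encoding="unicode")
```

For example, `build_apply_request({"Name": "J. Jansen"}, {"LicensePlate": "AB-123-C"})` yields a single document with a `Resident` and a `ParkingPermitApplication` section under one root.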

For the sake of example, the response message has been kept simple by returning a string stating that the application has been received successfully. The description of the request message looks as follows:

Other message descriptions that need to be made are the request and response messages for the Verify Ownership, Verify Electoral Registers and Verify Residence use cases.

You should keep the message descriptions separate from the use case descriptions to support reuse, and refer to them from the use case descriptions instead.

Message Transformations

How messages are transformed from one format to the other depends on the situation. For example, in the case of BPEL, transformations can be done by one or more assign operations or by an XSLT transformation, both of which may involve complex XPath queries or regular expressions.

For this reason, transformations are probably best described in text, as has been done in the following example:

The example is trivial in that the transformation consists of taking a subset of the fields of the source message and mapping those 1:1 onto fields of the target message.
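A subset-and-rename mapping of this kind is simple enough to sketch in a few lines. The field names in `APPLY_TO_VERIFY_RESIDENCE` are hypothetical, chosen only to suggest a mapping from the application request to a Verify Residence request.

```python
def map_subset(source: dict, field_map: dict) -> dict:
    """Copy a subset of source fields 1:1 onto (possibly renamed) target fields.

    Source fields that do not appear in field_map are simply dropped.
    """
    return {target: source[src] for src, target in field_map.items()}


# Hypothetical mapping: source field name -> target field name.
APPLY_TO_VERIFY_RESIDENCE = {
    "residentName": "name",
    "residentAddress": "address",
}
```

In BPEL terms this corresponds to a handful of copy rules in an assign, one per mapped field.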

You can imagine that in practice more complex transformations are often needed, for example for n:m mappings. A two-column format usually suffices: the left (Source/Transformation) column specifies which source fields are involved and any logic that needs to be applied to them, and the right (Target) column specifies the (single) target field.
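To show how such a two-column table corresponds to executable logic, here is a minimal sketch in which each row lists its source fields plus the logic to apply (left column) and names exactly one target field (right column). An n:m mapping then becomes several rows. The field names and the rows in `ROWS` are hypothetical, and the textual description remains the specification; this is only a reading aid.

```python
from typing import Callable, NamedTuple, Sequence


class MappingRow(NamedTuple):
    """One row of the two-column transformation table."""
    sources: Sequence[str]        # left column: source fields involved...
    logic: Callable[..., object]  # ...and the logic applied to them
    target: str                   # right column: the single target field


def transform(source: dict, rows: Sequence[MappingRow]) -> dict:
    target = {}
    for row in rows:
        # Pass the listed source values, in order, to the row's logic.
        values = [source[name] for name in row.sources]
        target[row.target] = row.logic(*values)
    return target


# Hypothetical rows: n:1 (street + house number -> address) and
# 1:n (full name -> first name and last name, one row per target).
ROWS = [
    MappingRow(("street", "houseNumber"), lambda s, n: f"{s} {n}", "address"),
    MappingRow(("fullName",), lambda full: full.split()[0], "firstName"),
    MappingRow(("fullName",), lambda full: full.split()[-1], "lastName"),
]
```

Restricting every row to a single target field keeps each row independently reviewable, which is the main benefit of the two-column format.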

As with message formats, you should keep the message transformations separate from the use case descriptions to support reuse.

Where to Stop

Once the analysis model has been worked out to a sufficient level of detail, you are ready for the design. What “sufficient” means in this context depends on many factors, the most important being the following:

Nature of Engagement

In some cases a formal process needs to be followed that requires every change to the specifications to be approved up front. At the other end of the spectrum is the agile approach, in which part of the (detailed) requirements are captured while validating iterations of a working program.

Skills and Habits of Developers

Developers are most comfortable with what they are used to working with. However, be aware that having every developer get used to one broadly accepted way of creating specifications almost always beats a situation in which developers need to get used to as many different styles as there are developers, not to mention the effort needed to validate specifications written in all those styles.

For most projects that involve requirements analysis, class models are very useful to developers, even in the case of BPEL development.

Application and Technical Architecture

The development of data-oriented systems that depend on a well-structured database can benefit greatly from a detailed class diagram. For use cases that involve a user interface, activity diagrams normally only add value when a complex dialog is involved.

For SOA projects that primarily deal with messaging it probably suffices to detail classes to the level that all attributes and their types are known. Message formats and transformations often need to be worked out in detail. In general, activity or sequence diagrams add great value to business processes and services, as they support a more effective implementation.

Other architectures, for example identity management and security, in turn require yet other details.

A final remark is that use case analysis should not be confused with technical design. Although some people state that class models, and activity diagrams that describe system-internal behavior, are “technical”, they actually only capture detailed requirements or validate higher-level requirements from a different angle.

To be continued ....