In this article I discuss how to get users in an IAM group to support task reassignment from a custom task list. It stands by itself as a topic but is also part of the series of articles in which I discuss some challenges you may face when upgrading Oracle Integration Gen2 (OIC 2) Process (PCS) to Gen3 (OIC 3) Oracle Process Automation (OPA) applications. The previous article can be found here.

Disclaimer: The below provides a snapshot at the time of writing. Things may have changed by the time you read it. It is also important to mention that this does not necessarily represent the view of Oracle.

Specifically for PCS to OPA migration you are also referred to the following:

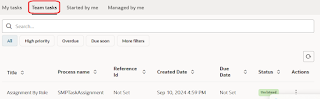

When building a custom task list application for Oracle Process Automation, you may need to implement functionality to reassign a task to someone else who is in the IAM Group associated with the swimlane role the task is in. The same functionality is also available in the out-of-the-box workspace.

In PCS (OIC 2) you could use the /ic/api/process/v1/identities/groups/{groupid}/users API for this. Because it is part of the PCS APIs, it is secured in the same way as all the others, and you cannot do more with it than it is designed for, which mainly is retrieving users, groups, and roles. Oracle Process Automation (OIC 3) does not have an equivalent of the /ic/api/process/v1/identities APIs. Instead, you are supposed to use the IAM APIs.

The Challenge

Now here comes the challenge. To be able to populate a drop-down (or similar widget) with users, you will call this API from the UI. To avoid having to authorize every user to call this API with their own credentials, this is typically done using the OAuth 2.0 Client Credentials flow. This flow is then based on a client Confidential Application with at least the IAM User Administrator application role assigned to it, which allows adding, changing, and deleting users, groups, and group memberships. Even though the UI may have been designed to only select users in a particular group, a more advanced user can use the browser developer tools to call the API directly and do any of those things. For most of my customers this is not acceptable from a security perspective and would block the go-live of such an implementation.

The Remedy

Fortunately, the remedy is pretty straightforward (albeit some work): you create an Integration that serves as a proxy to IAM. It involves the following:

- Creation of a client Confidential Application in IAM with the User Administrator IAM application role assigned to it.

- Creation of an Integration Connection to call the IAM REST APIs using the Client Credentials of the previous step.

- Creation of an Integration that returns the list of users in an IAM Group based on its display name.

1. Confidential Application Creation

The client Confidential Application is created in Cloud Console > Identity Security > Domains > [IAM domain associated with OPA] > Integrated Applications > Add Application.

The configuration looks as in the following screenshots. The parts indicated in red are what you configure; the rest can be left at the defaults.

2. Integration Connection Creation

The Connection in Integration is created as shown below. Mind the value of the ‘urn:opc:idm:__myscopes__’ scope, which is needed to access the IAM admin APIs.
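To make clearer what the Connection does with these settings, here is a minimal Python sketch of the token request in an OAuth 2.0 Client Credentials flow. It only builds the request and does not send it. The function name, the `/oauth2/v1/token` path, and the example domain URL are my assumptions for illustration; check them against your own IAM domain.

```python
import base64
import urllib.parse
import urllib.request

# Scope mentioned above; it grants whatever the application's IAM roles allow.
IAM_SCOPE = "urn:opc:idm:__myscopes__"


def build_token_request(domain_url: str, client_id: str, client_secret: str) -> urllib.request.Request:
    """Build (but do not send) a Client Credentials token request."""
    # Client id and secret travel as HTTP Basic credentials.
    credentials = f"{client_id}:{client_secret}".encode("utf-8")
    body = urllib.parse.urlencode(
        {"grant_type": "client_credentials", "scope": IAM_SCOPE}
    ).encode("ascii")
    return urllib.request.Request(
        url=domain_url.rstrip("/") + "/oauth2/v1/token",  # assumed token endpoint path
        data=body,
        method="POST",
        headers={
            "Authorization": "Basic " + base64.b64encode(credentials).decode("ascii"),
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )


# Hypothetical domain URL and credentials, for illustration only.
request = build_token_request("https://idcs-example.identity.oraclecloud.com", "id", "secret")
print(request.full_url)
```

The point of keeping this inside the Integration Connection is that neither the client secret nor the resulting token ever reaches the browser.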

3. Integration Creation

Regarding the Integration, there probably is more than one way to skin that cat, but I consider the following a proper one.

The design looks as follows:

The PCS API /ic/api/process/v1/identities/groups/{groupid}/users takes the id of the IAM group as a template (path) parameter. In the UI you don’t have a handle on that id, and when promoting the application from one environment to the next you will probably deploy to a different domain at some point, where that id will be different. But you can keep the group’s display name the same for all domains. Therefore, the interface of the Integration can be designed to look as follows:

Resource URI: /groups/{groupDisplayName}/users

Action: GET

Response:

    {
      "items" : [ {
        "value" : "123xyz",
        "display" : "John Doe",
        "name" : "john.doe@oracle.com"
      } ],
      "count" : 1
    }
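As an illustration of how a custom task list could consume this response, the following Python sketch turns it into value/label pairs for a drop-down. The function name and the label format are my own choices, not something prescribed by the Integration.

```python
import json


def to_dropdown_options(response_body: str) -> list[dict[str, str]]:
    """Turn the proxy's JSON response into value/label pairs for a drop-down."""
    payload = json.loads(response_body)
    options = [
        # "value" is the IAM user id, "display" the full name, "name" the user name.
        {"value": item["value"], "label": f'{item["display"]} ({item["name"]})'}
        for item in payload.get("items", [])
    ]
    # Sort case-insensitively so the list is easy to scan.
    return sorted(options, key=lambda option: option["label"].casefold())


sample = '{"items": [{"value": "123xyz", "display": "John Doe", "name": "john.doe@oracle.com"}], "count": 1}'
print(to_dropdown_options(sample))
```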

The getGroupNameIAM activity in the Integration gets the group from IAM using its display name:

Resource URI: /Groups

Action: GET

Query Parameters:

- filter (String)

Response:

    {
      "Resources" : [ {
        "compartmentOcid" : "ocid1.tenancy.oc1..xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx",
        "displayName" : "Sample Group",
        "domainOcid" : "ocid1.domain.oc1..xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx",
        "id" : "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx",
        "urn:ietf:params:scim:schemas:oracle:idcs:extension:dynamic:Group" : {
          "membershipType" : "static"
        }
      } ],
      "totalResults" : 1
    }

The ‘urn…’ element must be included for the Integration to interpret the response.

When mapping the groupDisplayName from the Integration’s request to this API you can use the following expression:

concat ('displayName eq "', /nssrcmpr:execute/nssrcmpr:TemplateParameters/ns21:groupDisplayName, '"' )
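For readers who want to try the same filter outside of Integration, here is a Python sketch of what the expression produces. The function names are hypothetical. Note that the escaping of quotes and backslashes is an addition of mine that the concat expression above does not perform, and I am assuming IAM accepts backslash escapes in filter values.

```python
import urllib.parse


def build_group_filter(group_display_name: str) -> str:
    """Build the same filter string as the concat expression."""
    # Assumption: escape backslashes and double quotes so the name
    # cannot break out of the quoted filter value.
    escaped = group_display_name.replace("\\", "\\\\").replace('"', '\\"')
    return f'displayName eq "{escaped}"'


def build_groups_query(group_display_name: str) -> str:
    """Return the relative /Groups URI with the URL-encoded filter parameter."""
    query = urllib.parse.urlencode({"filter": build_group_filter(group_display_name)})
    return "/Groups?" + query


print(build_groups_query("Sample Group"))
```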

Configured like this, the call should return exactly one group. Using that group’s id, the getUsersInGroupIAM activity gets all users in that group:

Resource URI: /Groups/{groupid}

Action: GET

Response:

    {
      "members" : [ {
        "value" : "123xyz",
        "display" : "John Doe",
        "name" : "john.doe@oracle.com"
      } ],
      "urn:ietf:params:scim:schemas:oracle:idcs:extension:dynamic:Group" : {
        "membershipType" : "static"
      }
    }

Again, the ‘urn…’ element in the response is just there for Integration to be able to interpret it. The mapping of it to the Integration’s response is pretty straightforward:
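In code, that mapping could be sketched roughly as follows. This is a Python illustration of the idea, not what the Integration mapper generates. The function name is hypothetical, and I am assuming that "count" is simply the number of members returned and that a group without members may omit the "members" element.

```python
def map_members_to_response(group: dict) -> dict:
    """Map the IAM group payload to the Integration's response shape."""
    # Assumption: "members" may be missing or null for an empty group.
    members = group.get("members") or []
    items = [
        {
            "value": member.get("value"),
            "display": member.get("display"),
            "name": member.get("name"),
        }
        for member in members
    ]
    # Only the three user fields are passed on; the 'urn...' extension is dropped.
    return {"items": items, "count": len(items)}


iam_group = {
    "members": [{"value": "123xyz", "display": "John Doe", "name": "john.doe@oracle.com"}],
    "urn:ietf:params:scim:schemas:oracle:idcs:extension:dynamic:Group": {"membershipType": "static"},
}
print(map_members_to_response(iam_group))
```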

The Default Fault Handler is there to prevent the Integration from returning any detailed information from the backend, should it fail. I let it return a 401 in all cases, which is another best practice you should apply where security is concerned. The “not found” route returns a 404 when no IAM group can be found with the provided groupDisplayName.
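To summarize the control flow of the Integration, including the two error routes, here is a rough Python sketch. The two callables stand in for the getGroupNameIAM and getUsersInGroupIAM activities. Their signatures and the error bodies are hypothetical; only the status codes come from the design above.

```python
from typing import Callable


def get_users_in_group(
    group_display_name: str,
    find_groups: Callable[[str], list],  # stand-in for getGroupNameIAM
    get_group: Callable[[str], dict],    # stand-in for getUsersInGroupIAM
) -> tuple:
    """Return (HTTP status, response body) the way the proxy would."""
    try:
        groups = find_groups(group_display_name)
        if not groups:
            # "Not found" route: no IAM group with this display name.
            return 404, {"error": "group not found"}
        group = get_group(groups[0]["id"])
        members = group.get("members") or []
        return 200, {"items": members, "count": len(members)}
    except Exception:
        # Default fault handler: never leak backend details to the caller.
        return 401, {"error": "unauthorized"}


def failing_lookup(name: str) -> list:
    raise RuntimeError("backend detail that must not leak")


print(get_users_in_group("Sample Group", failing_lookup, lambda group_id: {}))
```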

Previous articles on the PCS to OPA migration subject:

- Part 1 Starting a Process Instance as a Webservice & Consuming SOAP Integrations

- Part 2 Multiple Start Events & Synchronous Process Start

- Part 3 /tasks API challenges

- Part 4 Process Instance and Task Properties

- Part 5 Multi-instance Embedded Subprocess, Current Date ('now'), Process Correlation

- Part 6 Receive and Message Start Interface

- Part 7 Task assignment to individuals

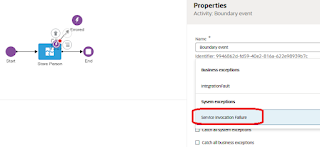

- Part 8 Boundary Error event handling