In my opinion, OIC Events is a generally overlooked but very useful feature. In this blog post I describe how it works, a couple of typical use cases (as I see them) and a few considerations when using it.

Basically, there are 2 different types of event-based messaging:

- Queues, which use a point-to-point model where each message is delivered to a single consumer.

- Topics, which use a publish/subscribe model where the producer broadcasts messages that can be consumed by multiple subscribed consumers.

The main difference between event-based messaging on the one hand and synchronous or fire&forget messaging on the other is about coupling and scalability. With event-based messaging, messages can be produced even when no consumer is active, to be consumed later when the consumer does become active (guaranteed delivery). To prevent unconsumed messages from piling up, they typically expire after some time, so delivery is only guaranteed within that time frame. Topics provide a more loosely coupled model than queues, because subscribers to a topic can come and go. Event-based messaging typically also scales better, as the consumer can handle messages at a sustainable pace even when there is a peak load in messages being produced.
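To make the difference between the two models concrete, here is a minimal in-memory sketch in Python. It is not how OIC implements anything; the `Queue` and `Topic` classes, the `ttl_seconds` parameter and the explicit `now` argument are my own assumptions for illustration.

```python
import time
from collections import deque


class Queue:
    """Point-to-point: each message is delivered to exactly one consumer."""

    def __init__(self):
        self._messages = deque()

    def publish(self, message):
        self._messages.append(message)

    def consume(self):
        # Whoever asks first gets the message; no other consumer will see it.
        return self._messages.popleft() if self._messages else None


class Topic:
    """Publish/subscribe: every subscriber gets its own copy of each message."""

    def __init__(self, ttl_seconds):
        self._ttl = ttl_seconds
        self._backlogs = {}  # subscriber name -> deque of (published_at, message)

    def subscribe(self, name):
        self._backlogs.setdefault(name, deque())

    def publish(self, message, now=None):
        now = time.time() if now is None else now
        # The producer does not know or care how many subscribers there are.
        for backlog in self._backlogs.values():
            backlog.append((now, message))

    def consume(self, name, now=None):
        now = time.time() if now is None else now
        backlog = self._backlogs[name]
        while backlog:
            published_at, message = backlog.popleft()
            if now - published_at <= self._ttl:
                return message
            # Otherwise the message has expired and is silently dropped.
        return None
```

A subscriber that is inactive for a while still finds its messages waiting when it comes back, unless they have outlived the time-to-live, which is the "guaranteed within that time frame" part.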

Logically speaking, OIC Events is an implementation of topics. So, the practical difference is this: when one Integration calls the other through an invoke (be that synchronous or fire&forget), then that other Integration must exist and be activated, otherwise the call will fail. In contrast, event-based Integrations are loosely coupled: the only thing they share is the definition of the event that one publishes and the other subscribes to. Obviously, there is no point in publishing events when no Integration will ever listen, but the publisher should not care how many consumers there are nor whether any of those are activated at some point in time.

Over time the structure of an event can be changed, as long as it remains compatible with the event the consumer is expecting. For example, you should not remove an element from the published event that any consumer relies on, but you can add elements as required for new subscribers. An existing consumer can be refactored to take up the new definition of an event at some later point.
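The compatibility rule boils down to a set comparison: every element a consumer relies on must still be present in the new definition. A small sketch in Python, where the function names, the per-consumer field lists and the extra "country" element are assumptions of mine, not something OIC provides:

```python
def missing_fields(relied_on, new_definition):
    """Return the elements a consumer relies on that the new definition lacks."""
    return sorted(set(relied_on) - set(new_definition))


def is_compatible(consumers, new_definition):
    """True when no consumer loses an element it relies on."""
    return all(
        not missing_fields(fields, new_definition)
        for fields in consumers.values()
    )


# Hypothetical view of which elements each subscriber actually reads.
consumers = {
    "SMP Customer Creator": ["name", "address", "houseNumber", "postalCode", "city"],
    "SMP Customer Creation Notifier North": ["name", "region"],
}

current = ["address", "city", "postalCode", "name", "houseNumber", "region"]
with_added_element = current + ["country"]                      # adding is fine
with_removed_element = [f for f in current if f != "postalCode"]  # removing is not
```

With these definitions, `is_compatible(consumers, with_added_element)` holds, while `missing_fields` on the second variant reports `postalCode` for the creator.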

Sample Implementation

The following simple use case consists of 1 Event, 1 Connection and 4 Integrations:

The Customer Creation event looks like this:

It has one custom header element called "region". Header elements are typically used for filtering, as I will show later.

I only have 1 REST connection of type Trigger, nothing special about that.

There is 1 SMP Customer Creation Publisher Integration that publishes the customer creation event, and looks like this:

This publisher is triggered while passing in customer data:

    {
      "address": "Main Street",
      "city": "Amsterdam",
      "postalCode": "1000AA",
      "name": "ACME",
      "houseNumber": "1",
      "region": "North"
    }

The Integration publishes the message and gives a (synchronous) response to indicate that publication was successful. As explained, this does not mean that it was consumed successfully, or that there is even a consumer activated.

Then I have 2 subscribers. The first is the SMP Customer Creator, which consumes any message and then calls an SMP Customers integration to create the customer. Integrations that consume messages are asynchronous by definition and therefore don’t have a response.

The other subscriber, SMP Customer Creation Notifier North, consumes all messages where the “region” custom header element has value “North” and then sends an email to the customer representative for the North region customers.

Messages can be filtered based on header elements, using JQ (JSON Query) expressions: https://docs.oracle.com/en/cloud/paas/application-integration/integrations-user/define-header-based-subscription-filtering.html
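Conceptually, header-based filtering works like the Python sketch below. In OIC the filter itself is written as a JQ expression on the subscriber; here I stand in for it with a plain dictionary of required header values, and the `matches` and `route` helpers are my own illustration rather than any OIC API.

```python
def matches(headers, required):
    """A subscriber matches when every required header has the expected value."""
    return all(headers.get(key) == value for key, value in required.items())


# An empty filter means "consume any message".
subscribers = {
    "SMP Customer Creator": {},
    "SMP Customer Creation Notifier North": {"region": "North"},
}


def route(headers):
    """Return the names of all subscribers that would receive this event."""
    return [name for name, required in subscribers.items() if matches(headers, required)]
```

An event with header `region` set to "North" reaches both subscribers; one with "South" only reaches the SMP Customer Creator.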

So I could also have other subscribers for different regions, each with a different implementation and even in another project. I can also deactivate the subscriber for one region while keeping those for other regions active.

Typical Use Cases

Considering delivery is only “guaranteed” until the message expires, and guaranteed delivery is not the same as guaranteed execution, you should not use OIC Events when must-have downstream logic depends on it. When execution fails, it may not be easy to recover. Also, in case of multiple subscribers, it should be acceptable that messages are consumed in whatever order.

When both are of no concern, a typical use case is that of implementing logic that must be able to handle peak loads. You can imagine 1 Integration that can be called many times in a short timeframe, or that fetches many rows at once from a database. Instead of doing the processing itself, it publishes a message for each individual call or row, to be processed by some other subscribing Integration.
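The peak-load pattern can be sketched in a few lines of Python. This is only an in-memory illustration of the idea; the `publish_rows` and `consume_batch` functions and the `max_messages` limit are assumptions of mine, not OIC settings.

```python
from collections import deque


def publish_rows(rows, backlog):
    """Producer side: publish one message per row, without processing anything."""
    for row in rows:
        backlog.append(row)
    return len(rows)


def consume_batch(backlog, handler, max_messages):
    """Consumer side: handle at most max_messages per run, at its own pace."""
    processed = 0
    while backlog and processed < max_messages:
        handler(backlog.popleft())
        processed += 1
    return processed


backlog = deque()
handled = []

# A peak of 10 rows arrives at once; publishing is cheap and returns immediately.
publish_rows(list(range(10)), backlog)

# The consumer works through the backlog in sustainable chunks.
while backlog:
    consume_batch(backlog, handled.append, max_messages=4)
```

The producer is done as soon as the messages are published, regardless of how long the consumer needs to work through them.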

Another, related use case is to process logic in parallel. For example, after successful completion of some logic a group of users must be notified by email, while (in parallel) log events need to be persisted. The processing of one should not hinder the other.

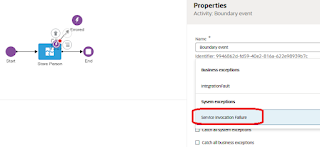

Which brings me to the typical case I use it for, which is custom logging, especially to persist data on error. You typically don’t want to stack error handling on top of error handling, and as persisting the logging may itself fail, I would rather publish a message than invoke some other Integration that may not be activated or work properly. Moreover, in case of errors related to high load you would not want to add insult to injury by topping this with even more load and immediately starting yet another Integration instance. Publishing a message instead gives the engine the opportunity to handle it when it has room to do so.

Another case is that of extendable business logic, for example where you want to be able to add future logic without having to change anything in the existing logic, as in my example when a new region is added.

Also, a typical use case is to start an Integration on file creation at the File Server. The File Server generates system events for file creation, deletion and download, and for folder creation and deletion. An Integration can subscribe to any of these events. You can imagine that over time new system events are introduced, although no example pops into my head right now.

Considerations

When using OIC Events at this point in time there are the following considerations:

- There is a maximum of 50 Integrations that can subscribe to (any) event per OIC instance. So if you plan to implement an event-driven architecture in combination with many Integrations, then OIC Events is not the way forward. You should use OIC Streaming instead, which nicely integrates with OIC.

- Only an Integration in a Project can subscribe to a system event.

- For deactivated subscribers, events are retained for a maximum of 24 hours.

- You can have a maximum of 10 custom headers.

- You can only publish and subscribe to events within the same OIC instance. If you want to implement pub/sub use cases concerning multiple OIC instances, use OIC Streaming instead.
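The numeric limits above are easy to check against a design before you start building. A rough sketch in Python, using only the numbers from this list; the constant and function names are hypothetical, and the limits themselves may change between OIC releases.

```python
from datetime import datetime, timedelta

# Limits as listed above, at the time of writing.
MAX_SUBSCRIBING_INTEGRATIONS = 50   # per OIC instance, across all events
MAX_CUSTOM_HEADERS = 10             # per event
RETENTION = timedelta(hours=24)     # for deactivated subscribers


def check_design(subscriber_count, custom_headers):
    """Return a list of limit violations for a planned event design."""
    problems = []
    if subscriber_count > MAX_SUBSCRIBING_INTEGRATIONS:
        problems.append("too many subscribing integrations")
    if len(custom_headers) > MAX_CUSTOM_HEADERS:
        problems.append("too many custom headers")
    return problems


def still_retained(published_at, now):
    """True while an event for a deactivated subscriber is within retention."""
    return now - published_at <= RETENTION
```

For example, `check_design(3, ["region"])` returns an empty list for my sample implementation, while an event published 25 hours before a subscriber is reactivated is no longer retained.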