In a previous blog article I introduced the Microprocess Architecture. This article discusses the consequences of maintaining, managing and running applications that are built according to this architecture.

For about a year and a half we have been applying the Microprocess Architecture to some four Dynamic Process applications that are being built with Oracle Integration Cloud (OIC), although none of them has gone into production so far. We can clearly see the benefits of applying the Microprocess Architecture, but we also recognize the consequences. I have addressed most of these, with some pointers for dealing with them, in a follow-up article on the subject.

As the go-live date slowly but inevitably approaches, people naturally start wondering what maintaining, managing and running such applications means in the context of OIC. The discussion below addresses this, using “business process” to refer to the main application that is made up of a collection of microprocesses.

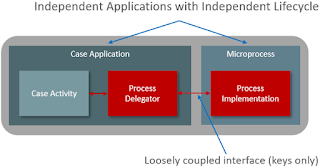

The following picture shows the core concepts of the Microprocess Architecture in the context of a case application:

The case application with its activities is separated from the components that do the "actual work": the microprocesses. The case application only does the choreography and is therefore restricted to rule logic. The microprocesses are relatively small and simple stand-alone process applications that make service calls and handle human tasks.

In summary, the downsides of microprocesses compared to a monolith are:

- Impact analysis will be more complex

- Deployment is more complex

- Analysis of an issue in the flow of a business process is more complex

In summary, the upsides of microprocesses compared to a monolith are:

- Impact of change tends to be smaller

- They are easier to maintain in Process Composer

- Changes can be brought quicker to production

- Multiple developers can work on the same business process application

- The flow of a single microprocess instance is easier to analyze

- The memory footprint is smaller

- Little to no need for process instance migration

- Fewer revisions (versions) deployed

- Risk of issues with OIC upgrades is smaller

- Less need to scale OIC instances

The consequences are determined by three different aspects that are discussed first:

- Number of applications

- Complexity and size

- Passthrough time

After that, the effects of these aspects are discussed in terms of:

a) Design-time consequences

b) Run-time consequences (for individual applications)

c) Consequences on the infrastructure

1. Number of Process Applications

One obvious consequence of the Microprocess Architecture is that you will have many more components to deploy than with monolith process applications. The clearest example is that the top-level process only does orchestration (or, as we should say in the case of a Dynamic Case application, choreography), delegating all the ‘real work’ to multiple microprocesses, each of which is a process application of its own.

One of the applications we are building already consists of some 30 microprocesses, and counting. In the end we plan to have some 5 applications plus a couple of reusable “utility” applications (most of them consisting of just 1 microprocess). The current estimate is that we will end up with some 150 to 200 individual process applications, the majority of them being microprocesses.

2. Complexity and Size of Process Applications

Another obvious consequence is that each individual component is relatively simple and small. A microprocess can have many process activities in a complex flow with many gateways and so on, but should support one single business capability only.

For example, instead of one big sales process that starts with a customer request and only ends after the product has been delivered, you will end up with many smaller processes where one covers the intake of the customer request, another offers the quote, a third handles the signature and so on. The signature microprocess may support different scenarios, from getting a signature from one customer up to a whole board of directors, with approval patterns, reminders and escalation, and therefore can be quite complex. But in the end it has one single responsibility, which is to get a contract signed. So a microprocess is not necessarily simple, but it is by definition limited in scope.

3. Passthrough Time of Process Instances

The fact that a microprocess is relatively small implies that the passthrough time of its instances will be relatively short. The only instance that runs from start to end is the top-level process orchestrating it all. However, in some cases even that can be split up, for instance into a few smaller successive steps, where each step covers a major phase that triggers the next one and then stops.

For example, the top-level process of a sales process could be split into one part covering the intake and quotation, which then triggers one or more instances that cover the delivery of the products.

Longer-running microprocesses are typically those involving human activities, or activities handling asynchronous interaction with other applications or external parties. But there will not be many activities of this kind in one single microprocess. For the case applications we are building, most of the instances live only a few minutes or less, some up to a couple of days, and only a few may run for a longer period (typically while waiting for a response from an external party).

In what follows, a monolith process application is compared with one that is built completely using the Microprocess Architecture. In practice you will probably have neither of these extremes.

a) Design-Time Consequences (Process Composer)

OIC currently has no out-of-the-box support for identifying relationships between process applications (let alone visualizing them), other than offering an API that exposes process definitions. For example, /ic/api/process/v1/dp-definitions/{id}/metadata shows all stages and the activities in those stages (called “plan items”), but not which microprocess an activity is calling.
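As a minimal sketch of how that metadata could be retrieved for inspection, the Python below builds the URL from the path quoted above and returns the parsed response. The host name, the use of basic authentication and the assumption that the response is JSON are all illustrative guesses, not something confirmed here, and the function names are hypothetical.

```python
import base64
import json
import urllib.parse
import urllib.request

# Path as quoted in this article; {id} is the Dynamic Process definition id.
METADATA_PATH = "/ic/api/process/v1/dp-definitions/{id}/metadata"


def metadata_url(base_url: str, definition_id: str) -> str:
    """Build the metadata URL for one Dynamic Process definition."""
    safe_id = urllib.parse.quote(definition_id, safe="")
    return base_url.rstrip("/") + METADATA_PATH.format(id=safe_id)


def fetch_metadata(base_url: str, definition_id: str, user: str, password: str):
    """GET the metadata document and return it as parsed JSON.

    Assumption: the OIC instance accepts basic authentication and answers
    with JSON. Adjust to whatever your environment actually uses.
    """
    request = urllib.request.Request(metadata_url(base_url, definition_id))
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request.add_header("Authorization", f"Basic {token}")
    request.add_header("Accept", "application/json")
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

The sketch deliberately returns the raw document without interpreting it, because the metadata lists plan items but not the microprocess each activity calls; that relationship has to be recorded elsewhere.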

In the case of a monolith application, the main process and all subprocesses involved are in one and the same process application. It may not always be clear which (sub)process is calling which other, but you don’t have to look far. There is also only a single process application to deploy to bring a business process to production.

On the negative side

In the case of microprocesses it quickly becomes difficult to understand how a business process application is constructed: which microprocesses make up the total application, and how do they call each other? This complicates impact analysis.
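One way to keep impact analysis manageable is to maintain the call relationships yourself and query them. The following is a minimal sketch, assuming a hand-maintained map of which process calls which microprocess; the process names are hypothetical and only loosely follow the sales example used earlier.

```python
from collections import deque

# Hypothetical, hand-maintained call map: caller -> microprocesses it invokes.
CALLS = {
    "SalesCase": ["Intake", "Quote", "Signature"],
    "Quote": ["PriceLookup"],
    "Signature": ["SendReminder"],
    "Intake": [],
    "PriceLookup": [],
    "SendReminder": [],
}


def impacted_by(changed: str, calls: dict) -> set:
    """Return every process that directly or indirectly calls `changed`."""
    # Invert the map so we can walk from a callee up to its callers.
    callers = {}
    for caller, callees in calls.items():
        for callee in callees:
            callers.setdefault(callee, set()).add(caller)

    impacted = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for caller in callers.get(current, ()):
            if caller not in impacted:
                impacted.add(caller)
                queue.append(caller)
    return impacted
```

With this map, a change to `PriceLookup` would flag `Quote` and `SalesCase` for review, while a change to the top-level `SalesCase` flags nothing else.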

Because a business process application is split into multiple microprocesses, you also deploy multiple process applications to production. This complicates the overall deployment of a single business process.
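If you keep a call map of your process applications, a deployment order can be derived from it. The sketch below assumes (this is my assumption, not an OIC requirement stated here) that a microprocess should be deployed before anything that calls it, and uses Python's standard `graphlib` module; the process names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical call map: caller -> microprocesses it invokes.
CALLS = {
    "SalesCase": ["Intake", "Quote", "Signature"],
    "Quote": ["PriceLookup"],
    "Signature": ["SendReminder"],
    "Intake": [],
    "PriceLookup": [],
    "SendReminder": [],
}


def deployment_order(calls: dict) -> list:
    """Order process applications so each one comes after everything it calls."""
    # TopologicalSorter takes node -> predecessors. The callees of a process
    # act as its predecessors because they are deployed first.
    # A circular call chain raises graphlib.CycleError.
    return list(TopologicalSorter(calls).static_order())
```

For the map above, the top-level `SalesCase` always ends up last, and `PriceLookup` always precedes `Quote`.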

On the positive side

Each individual microprocess application will be less complex and therefore easier to maintain than a monolith would be. Among other things, a microprocess is expected to open more quickly in Process Composer, and activation will be faster.

This comes on top of the advantages mentioned before:

- In many cases a change is localized to one microprocess, so the impact of a change tends to be smaller.

- As a change is applied to a component with limited functionality, you can typically bring changes to production much more quickly, shortening your time to market.

- More than one developer can work on one business process application at the same time (only one person can modify a given process application at a time).

b) Run-Time Consequences

OIC currently has no out-of-the-box support for easily navigating from one process instance to another, let alone a way to show the overall flow of a business process. When a process instance is paused, its state is persisted in a database (this is called dehydration) and the runtime memory it consumed is released. As soon as it resumes, the state is retrieved from the database (this is called hydration) and the instance starts consuming memory again.

In the case of a monolith application with decomposition done using reusable subprocesses, the execution of a business process is presented as one flow from start to end. You can review a sub-flow execution by expanding the call node of the invoke to the subprocess.

On the negative side

In the case of microprocesses, an end-to-end flow of what functionally is one business process may involve many individual running instances interacting with each other. Because there is no simple navigation from one instance to another, performance problems and other runtime issues can become very difficult to analyze, taking considerably more time. It should also be noted that starting an instance of the next process is expected to cost more bootstrapping time than activating a subprocess in the same instance.
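To illustrate what reconstructing such an end-to-end flow involves, here is a minimal sketch. It assumes that every microprocess instance records a shared correlation id (for example a business key passed down from the top-level process) and that you can export instance records somehow; both are assumptions, and the record layout is hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class InstanceRecord:
    correlation_id: str  # hypothetical business key shared by the whole flow
    process_name: str
    instance_id: str
    started: datetime


def group_flows(records):
    """Group instance records per business flow, ordered by start time."""
    flows = defaultdict(list)
    for record in records:
        flows[record.correlation_id].append(record)
    for flow in flows.values():
        flow.sort(key=lambda record: record.started)
    return dict(flows)


records = [
    InstanceRecord("order-42", "Quote", "i-2", datetime(2020, 1, 1, 9, 5)),
    InstanceRecord("order-42", "Intake", "i-1", datetime(2020, 1, 1, 9, 0)),
    InstanceRecord("order-43", "Intake", "i-3", datetime(2020, 1, 1, 9, 1)),
]
flows = group_flows(records)
```

Here `flows["order-42"]` lists the Intake instance followed by the Quote instance, which is the kind of overview a monolith gives you for free in a single instance view.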

On the positive side

The simpler a process application is, the easier it will be to analyze its flow. It therefore will be easier to pinpoint an issue in an instance of a microprocess. The process payload of a monolith instance will be bigger, and it will be hydrated more often and longer. In contrast, the memory footprint of all the hydrated instances of microprocesses for one business process flow is expected to be smaller.

Due to the shorter passthrough time, the following comes on top of the advantages mentioned before:

- Except for the top-level process, there will be little to no need for microprocess instance migration (i.e. migration of a runtime instance from one version of the application to the next one).

c) Consequences on the Infrastructure

The more revisions (versions) of process applications there are to deploy, the longer it takes the OIC instance to start up, as each individual revision will have to be (re)deployed. As CPU and memory consumption of an OIC instance continues to increase, you may reach a point where the instance has to be scaled up by adding more nodes to it.

On the negative side

Especially in the beginning there will be many more application revisions to deploy with a Microprocess Architecture. If an upgrade of OIC involves a change of the process application code in the background, there will also be many more application revisions to upgrade.

On the positive side

In the case of a monolith all changes are applied to the same application, instances run much longer, and they may not always be migratable. You should therefore expect to have to keep many more revisions deployed. As pointed out in the article introducing the Microprocess Architecture, the short passthrough time of a microprocess implies that obsolete revisions can be decommissioned quite quickly. Except for the top-level one, you are expected to need only 1 to 2 activated revisions per microprocess (*). It is difficult to predict whether rebooting an OIC instance after a while would take more or less time than in the case of a monolith.

As the individual microprocess applications are relatively simple, it is expected that the risk of issues with a code upgrade as the result of an OIC upgrade is smaller. And when there is an issue it probably is easier to fix.

Because of the smaller footprint of microprocesses, the need to scale up the infrastructure is expected to be limited. Performance issues with one microprocess impacting the others can be addressed by distributing microprocesses over different OIC instances.

(*) Currently OIC has a limitation that forces you to keep a revision deployed if it was part of the execution of any running business process flow. If you deactivate it while that is the case, the complete flow will be aborted.
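The rule in this footnote can be expressed as a simple check before decommissioning. The sketch below assumes you track, per running business process flow, which application revisions have taken part in it; how you obtain that information is left open, and all names and data are hypothetical.

```python
def removable_revisions(deployed: set, running_flows: dict) -> set:
    """Return the deployed revisions that no running flow has touched.

    deployed:      set of (application, revision) tuples currently deployed
    running_flows: flow id -> set of (application, revision) tuples that took
                   part in that still-running flow, including revisions whose
                   own instance has already completed
    """
    in_use = set().union(*running_flows.values())
    return deployed - in_use


deployed = {("Quote", "1.0"), ("Quote", "1.1"), ("Signature", "1.0")}
running_flows = {"flow-1": {("Quote", "1.1")}}
candidates = removable_revisions(deployed, running_flows)
```

In this example `Quote` 1.1 must stay because `flow-1` is still running, while `Quote` 1.0 and `Signature` 1.0 are candidates for deactivation.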